Eight Glitchy Things

AI agents that can pay, sad sandwich bots, broken TEEs, and more

PSA: This is the first edition of Glitchy Things, a news-focused digest we plan to send regularly in addition to our work featuring original journalism and event content. We hope you enjoy it!

The speed of technological evolution is causing familiar systems—from government to finance to journalism—to glitch. The resulting noise makes it tough to connect the dots. We hope this digest helps.

A developer of a Bitcoin privacy tool was sentenced to five years in prison. In July, Keonne Rodriguez pleaded guilty to operating an unlicensed money transmitting business. The charge falls under a section of the US criminal code that the Department of Justice has interpreted to mean that if one “knowingly” facilitates the transfer of criminal funds, they can be charged with operating an unlicensed money transmitting business, even if what’s actually facilitating the transfer is software that never takes control of user funds. (We’ve been documenting this confusing interpretation, which is also the basis for the prosecution of Tornado Cash developer Roman Storm, all year.)

Rodriguez, whose lawyers had requested a sentence of one year and one day, submitted a letter appealing for leniency from Judge Denise Cote of the Southern District of New York. But Cote “appeared extremely skeptical of any claim to Samourai Wallet’s usefulness for non-criminal ends or general privacy,” David Z. Morris reported for The Rage. Rodriguez’s co-developer, William Lonergan Hill, is scheduled to be sentenced next week.

Are the words of an AI chatbot “speech”? The family of a 14-year-old who committed suicide has brought a wrongful death and negligence lawsuit against Character.AI, the firm behind a chatbot with which the teen had developed a romantic relationship. The factors here are sensitive and complex, and we recommend reading The New York Times Magazine’s comprehensive piece on the case. One thing that immediately stands out, though, is Character.AI’s defense. As the piece explains:

This kind of negligence suit comes into U.S. courtrooms every day. But Character.AI is advancing a novel defense in response. The company argues that the words produced by its chatbots are speech, like a poem, song or video game. And because they are speech, they are protected by the First Amendment. You can’t win a negligence case against a speaker for exercising their First Amendment rights.

According to the NYT Mag (citing Helen Norton, a law professor at the University of Colorado), this is “very likely the first time the courts have confronted a ‘nonhuman speaker’ in a wrongful death case.” But it’s far from the last. “These disputes are going to come fast and furious as AI capabilities evolve,” Norton said.

Fake people fanned the flames of online outrage following Cracker Barrel’s logo change. A company that Cracker Barrel hired to analyze social media posts found that “32% to 37% of the online activity criticizing Cracker Barrel in the days after its logo announcement was fueled by fake accounts,” the Wall Street Journal reports. The research did not determine who was behind the fake accounts. But according to the WSJ, the bots amplified a number of X posts from the holdings of Sardar Biglari, a Cracker Barrel investor who also owns Steak ‘n Shake and the media brand Maxim. Biglari denied involvement, telling the WSJ that the bot story is just part of a “roulette wheel of excuses” from Cracker Barrel.

What’s in your (agent’s) wallet? If little AIs are going to run around the internet carrying out their masters’ orders, they’re not going to get far unless they can buy stuff. The question is, how are agent payments going to work? Back in September, crypto exchange Coinbase and Cloudflare, the web content delivery services company, announced they’d teamed up to start trying to answer that question with the x402 protocol. The idea behind x402 is to create a way for agents to be able to request an item of value—whether that’s the latest Shein fit, a new bunch of inference tokens, or whatever—from an online seller, get a “402: Payment Required” response, and then be able to submit details to complete the transaction (the 402 response is already embedded in HTTP, similar to its more famous sibling, “404: Not Found”). The framework includes a cryptographic signature that proves the agent making the purchase has been authorized to use the resources necessary to complete the transaction.
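To make the request, 402, pay, retry loop concrete, here is a minimal sketch in Python. It is not the x402 specification: the field names, the `seller` and `agent_buy` functions, and the price are all hypothetical, and an HMAC with a shared demo key stands in for the wallet signature a real deployment would use. The seller is a plain function rather than a live HTTP server, so the example runs without a network.

```python
import hashlib
import hmac
import json

# Hypothetical demo key standing in for the agent's wallet credentials.
AGENT_KEY = b"demo-agent-key"
PRICE_CENTS = 250  # made-up price for the requested item


def sign(payload: dict) -> str:
    """Sign a payment payload (HMAC is a stand-in for a wallet signature)."""
    message = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(AGENT_KEY, message, hashlib.sha256).hexdigest()


def seller(path: str, headers: dict) -> tuple[int, dict]:
    """Toy seller: answer 402 until a validly signed payment is attached."""
    required = {"amount_cents": PRICE_CENTS, "resource": path}
    payment = headers.get("payment")
    if payment is None:
        return 402, required  # "Payment Required", plus what is owed
    signature_ok = hmac.compare_digest(sign(payment["payload"]), payment["signature"])
    if signature_ok and payment["payload"] == required:
        return 200, {"item": f"contents of {path}"}
    return 402, required


def agent_buy(path: str, budget_cents: int) -> tuple[int, dict]:
    """Toy agent: request, read the 402 terms, pay if within budget, retry."""
    status, body = seller(path, {})
    if status != 402 or body["amount_cents"] > budget_cents:
        return status, body
    payload = {"amount_cents": body["amount_cents"], "resource": body["resource"]}
    payment = {"payload": payload, "signature": sign(payload)}
    return seller(path, {"payment": payment})
```

Calling `agent_buy("/inference-tokens", 500)` completes the purchase on the second request, while a budget of 100 leaves the agent holding the 402 response. The budget check is where an agent's spending authorization would be enforced in this toy version.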

Building on that idea, Coinbase released a tool late last month called Payments MCP that’s meant to allow AI agents to access cryptocurrency wallets. As the US’s largest crypto exchange and creator of the popular Ethereum Layer 2 Base, it’s pretty clear where Coinbase’s interests lie—legions of agents doing business onchain could be very good for business. But x402 isn’t being built to be crypto-specific; as Cloudflare’s blog post describing plans for x402 says, “Future versions of x402 could be agnostic of the payment rails, accommodating credit cards and bank accounts in addition to stablecoins.”

Of the Trump Organization’s $864 million in income in the first half of 2025, $802 million came from the family’s crypto businesses. That’s according to an investigation by Reuters, and it compares with just $51 million in revenue a year earlier. The report added that more than half of the Trumps’ income came from sales of World Liberty Financial tokens.

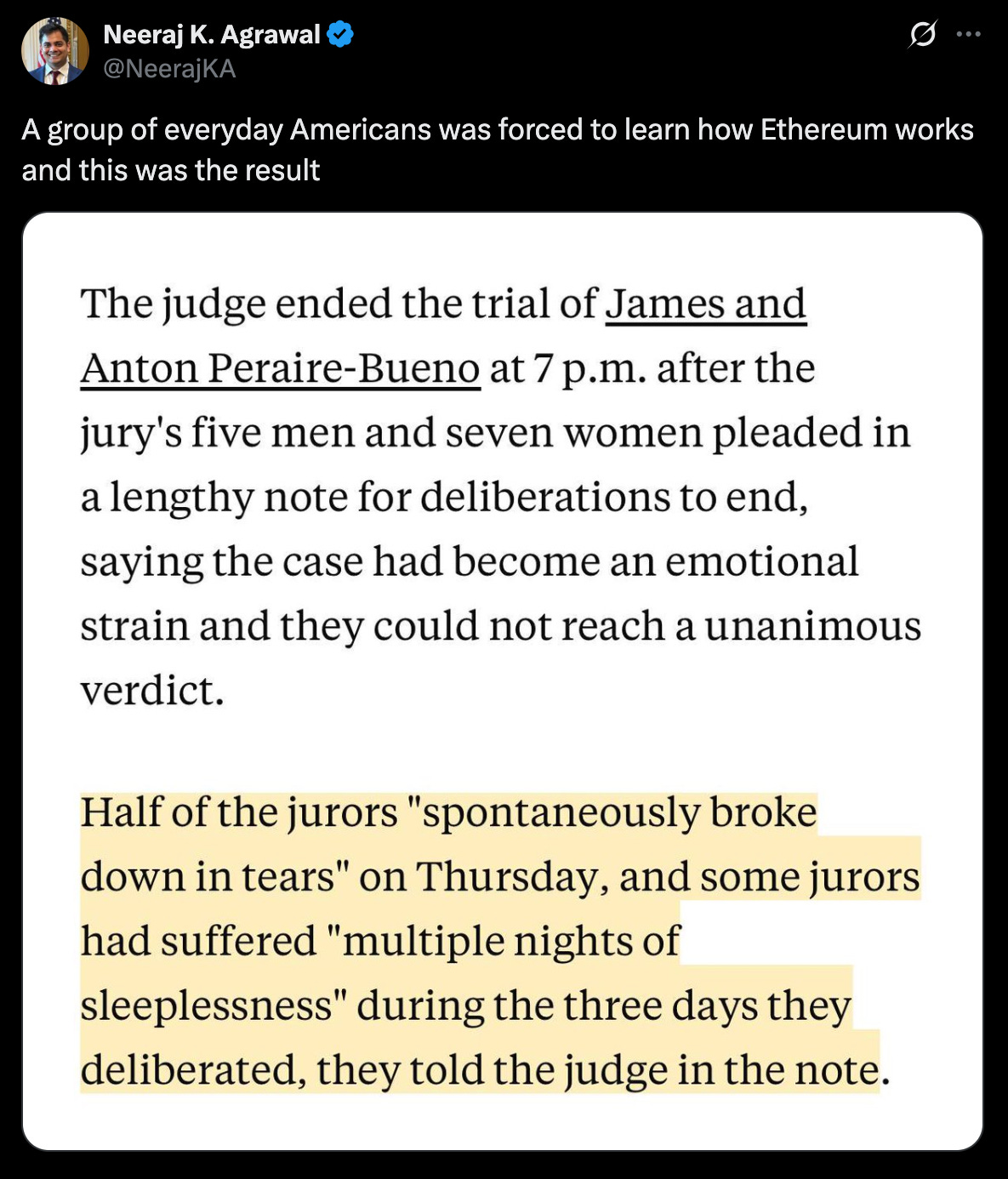

The crypto case that reduced jurors to tears might get a redo. Two brothers made $25 million in 12 seconds by exploiting Ethereum “MEV” bots. Was it a crime? Unclear—a federal prosecution ended in a mistrial last week after a jury was emotionally overwhelmed by the case.

Prosecutors have said they want another trial. If that happens, a fresh set of jurors will need to try and grasp the inner workings of the Ethereum network, specifically the process by which new transactions are added to blocks and published to the chain. There’s a lot of money to be made in this process, and this potential profit is called maximal extractable value, or MEV. Ethereum has a complicated system aimed at keeping the competition for this money fair. The game has three main players: 1. Searchers, who use bots to scan the queue of pending transactions for opportunities to make money by sequencing the transactions in specific ways. Searchers propose transaction bundles to 2. builders, who use them to build the most profitable blocks possible, and then offer a cut of the profit to 3. validators, which are responsible for adding new blocks to the chain.
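The three roles can be sketched as a toy pipeline in Python. This is a simplified illustration, not how Ethereum or MEV-Boost is implemented: the class names, the profit figures, the "top N bundles" selection rule, and the fixed profit cut are all assumptions made for the example, and the sketch leaves out the safeguards that limit what a validator can see before committing to a block.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bundle:
    searcher: str
    transactions: tuple[str, ...]  # transactions in the order the searcher wants
    profit: float  # value the searcher expects this ordering to capture


@dataclass(frozen=True)
class Block:
    builder: str
    bundles: tuple[Bundle, ...]
    bid: float  # share of the block's profit offered to the validator


def build_block(builder: str, bundles: list[Bundle], max_bundles: int, cut: float) -> Block:
    """Builder: keep the most profitable bundles and offer the validator a cut."""
    chosen = sorted(bundles, key=lambda b: b.profit, reverse=True)[:max_bundles]
    total = sum(b.profit for b in chosen)
    return Block(builder, tuple(chosen), bid=total * cut)


def choose_block(blocks: list[Block]) -> Block:
    """Validator: add the block that comes with the highest bid."""
    return max(blocks, key=lambda block: block.bid)


# Searchers submit bundles (hypothetical names and numbers).
bundles = [
    Bundle("searcher-1", ("tx1", "tx2"), 3.0),
    Bundle("searcher-2", ("tx3",), 5.0),
    Bundle("searcher-3", ("tx4",), 1.0),
]
block_a = build_block("builder-a", bundles, max_bundles=2, cut=0.5)
block_b = build_block("builder-b", bundles, max_bundles=3, cut=0.25)
winner = choose_block([block_a, block_b])
```

Here builder-a captures less total profit than builder-b but offers the validator a bigger share, so its block wins. That competition on bids is the part of the system the paragraph above describes as designed to keep the game fair.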

The vast majority of validators use open source software called MEV-Boost, developed by Flashbots, to interact with builders. The two brothers, James and Anton Peraire-Bueno, operated validators. They had found a vulnerability in MEV-Boost that gave them more insight into the content of proposed blocks than the system is supposed to allow. With that insight, they changed a block’s transaction sequence in a way that, as explained in a postmortem published at the time by Flashbots, “effectively stole” money from a bot that had proposed the original sequence. The brothers were charged with conspiracy to commit wire fraud, wire fraud, and conspiracy to commit money laundering. But the jury in their trial couldn’t reach a unanimous decision on the charges, and the judge declared a mistrial.

ICE and CBP agents are scanning people’s faces on the street to verify citizenship. That’s the headline from 404 Media, which reviewed multiple videos showing that the agencies are “actively using smartphone facial recognition technology in the field.”

Trusted execution environments (TEEs) maybe shouldn’t be so trusted. Researchers pulled off physical attacks on all three of the most popular confidential computing technologies on the market. This one is technical as hell, but it matters because TEEs are so prevalent. The hardware, used in cloud computing, AI, finance, defense, and even blockchain systems, is supposed to keep data and computations private, even from an attacker who has taken root-level control of the system. “It’s hard to overstate the reliance that entire industries have on three TEEs in particular: Confidential Compute from Nvidia, SEV-SNP from AMD, and SGX and TDX from Intel,” writes Dan Goodin of Ars Technica. That’s problematic, Goodin says, because researchers have now shown that all three can be broken via inexpensive physical attacks. He explains:

The low-cost, low-complexity attack works by placing a small piece of hardware between a single physical memory chip and the motherboard slot it plugs into. It also requires the attacker to compromise the operating system kernel. Once this three-minute attack is completed, Confidential Compute, SEV-SNP, and TDX/SDX can no longer be trusted.

The researchers behind the attacks, which they have collectively named TEE.fail, have published a paper and a website describing their work.